Introduction

Are you a JavaScript developer interested in building AI agents or LLM apps? Do you need to stream LLM responses from your server to the client? I recently needed to do exactly that and thought I'd share what I learned along the way.

In this post I'll cover how to stream OpenAI messages from a Node server to a client. LLMs are slow, and streaming the response to the user as it is generated helps hide that latency. The key concepts I'll demonstrate are generator functions, server-sent events, and `EventSource`, with Node.js on the server.

I've built a simple chatbot app with all these concepts that you can check out here. I built it with Nuxt, so I had a server out of the box. The examples below will also use Nuxt. I've tried to keep them simple so they can be applied to any framework or vanilla app.

Let's start on the server.

⚙️ Generator Functions

Generator functions are a special type of function that returns an iterator. You can pause and resume their execution, which makes them a good fit for handling async operations.

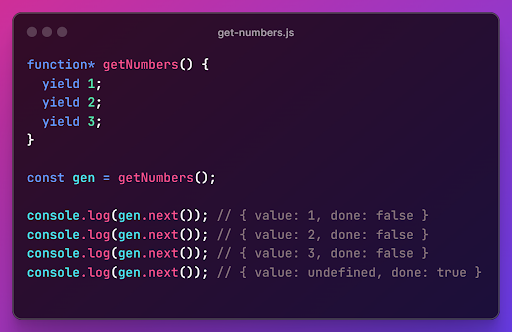

They are defined with an asterisk after the `function` keyword:
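Here is a minimal sketch of the `getNumbers` example discussed below. The `results` array is only there so all four `next()` calls can be inspected in one place.

```javascript
// A generator function: note the asterisk after `function`.
function* getNumbers() {
  yield 1;
  yield 2;
  yield 3;
}

// Calling the function does not run its body yet; it returns an iterator.
const gen = getNumbers();

// Each call to next() resumes the function until the next `yield`.
const results = [gen.next(), gen.next(), gen.next(), gen.next()];

console.log(results[0]); // { value: 1, done: false }
console.log(results[1]); // { value: 2, done: false }
console.log(results[2]); // { value: 3, done: false }
console.log(results[3]); // { value: undefined, done: true }
```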

What's going on here?

Generator functions return an iterator, which is an object with methods like `next()`, `return()` and `throw()`. It also keeps track of its own state. When we invoke `next()` for the first time, the generator starts running, pauses at the first `yield` and hands back that value wrapped in a result object (in this case `{ value: 1, done: false }`). In the example above, the fourth time you call `gen.next()` it returns `{ value: undefined, done: true }`. The generator function `getNumbers` only yields three values (1, 2 and 3), so after the third `yield` the function runs to completion. Because it has no explicit `return`, its return value is `undefined`, and `done` is `true` to signal that nothing is left to iterate.

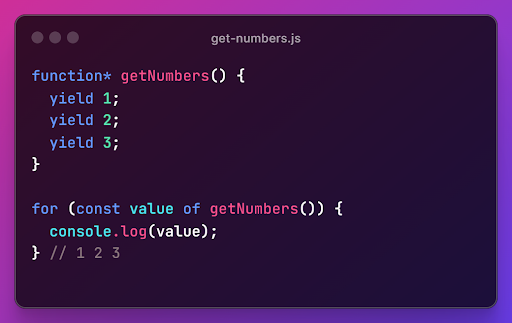

For the purposes of this post, however, the cool thing about generator functions is that you can iterate over them with a `for...of` loop:
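A small sketch of that loop, reusing the same `getNumbers` generator. The `seen` array is just a helper I added to collect the values.

```javascript
function* getNumbers() {
  yield 1;
  yield 2;
  yield 3;
}

const seen = [];

// for...of calls next() for us and stops when `done` becomes true.
for (const n of getNumbers()) {
  seen.push(n);
  console.log(n); // logs 1, then 2, then 3
}
```

The final `{ value: undefined, done: true }` result never reaches the loop body, so you only see the yielded values.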

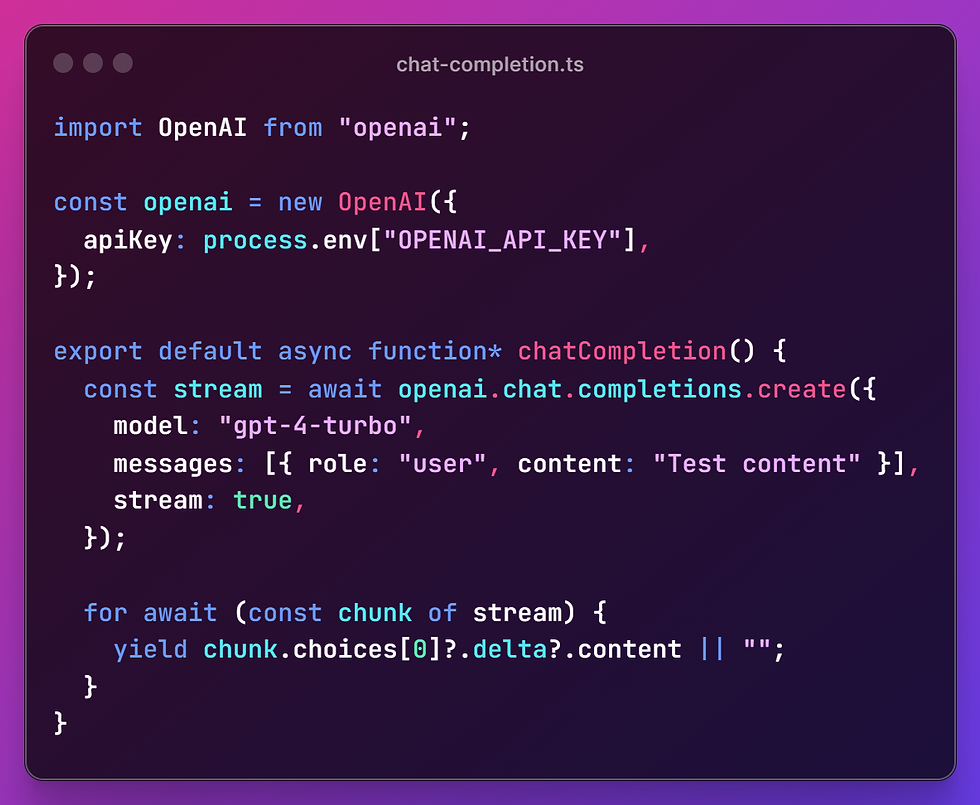

And this is super handy when it comes to streaming responses from an LLM. I'm using the OpenAI Node library for this example:
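Below is a rough sketch of what a `chatCompletion` async generator could look like. It assumes the official `openai` npm package (v4 or later) and an `OPENAI_API_KEY` environment variable. Passing the client in as an argument and the default model name are my own choices for this sketch, not requirements of the library.

```javascript
// Assumed setup, kept as a comment so the generator has no hard dependency:
//   import OpenAI from 'openai';
//   const client = new OpenAI(); // reads OPENAI_API_KEY from the environment

// An async generator: `async function*` lets us `await` and `yield`.
async function* chatCompletion(client, messages, model = 'gpt-4o-mini') {
  const stream = await client.chat.completions.create({
    model, // assumption: swap in any chat model you have access to
    messages,
    stream: true, // ask the API for incremental chunks
  });

  // The streamed response is itself an async iterable of chunks.
  for await (const chunk of stream) {
    const text = chunk.choices[0]?.delta?.content;
    if (text) yield text; // skip chunks that carry no text
  }
}

// Usage:
//   for await (const text of chatCompletion(client, [{ role: 'user', content: 'Hi' }])) {
//     process.stdout.write(text);
//   }
```

Because it is async, you consume it with `for await...of` rather than a plain `for...of`.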

↗️ Server-Sent Events

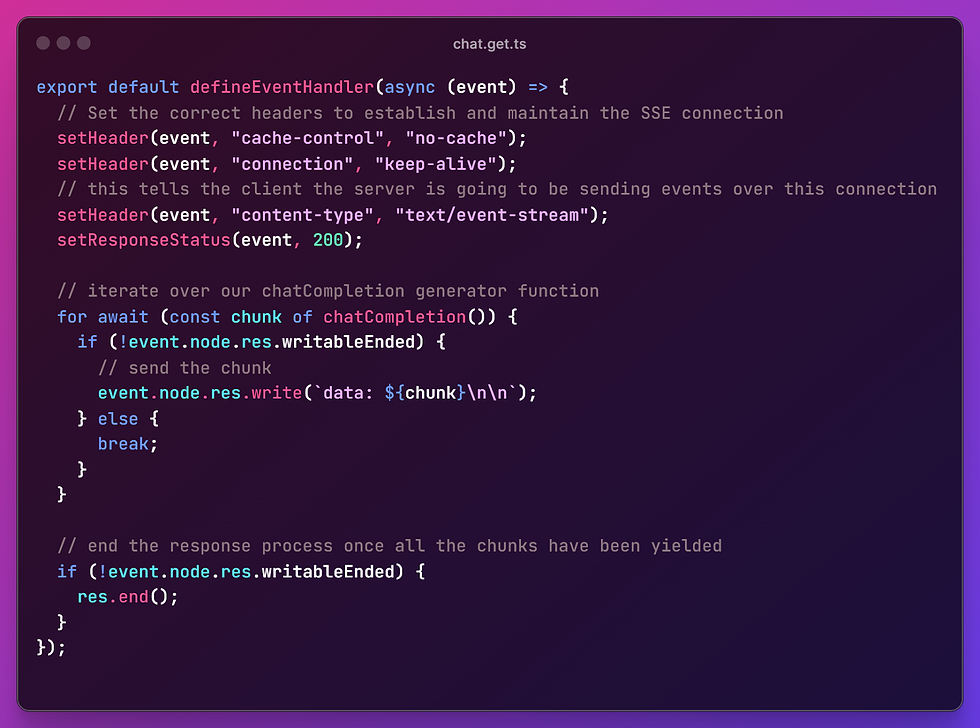

Server-sent events (SSE) allow you to push real-time updates to a client over a persistent HTTP connection. They are useful for live updates like sports scores, news feeds etc. We can use SSE in conjunction with our `chatCompletion` generator function to stream the chunks of text to a client.

I'm using Nuxt's Nitro server for my example. How you set headers may differ depending on the framework you're using. First we set the correct headers, most importantly `Content-Type: text/event-stream`. Then we can iterate over our `chatCompletion` generator function and write each chunk to the response. It's important to note that SSE responses must be sent in a specific format: each message should be prefixed with "data: " and suffixed with two newline characters "\n\n".
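To keep it framework-agnostic, here is a sketch written against a plain Node.js `http.ServerResponse` rather than Nitro's helpers. The names `toSSE` and `streamAsSSE` are made up for this example. JSON-encoding each chunk and sending a final `[DONE]` marker are conventions I've assumed here, not part of the SSE format itself.

```javascript
// Format one SSE message: "data: " prefix, then a blank line to end it.
// JSON-encoding is this sketch's choice; it stops a newline inside a chunk
// from being read as the end of the message.
function toSSE(payload) {
  return `data: ${JSON.stringify(payload)}\n\n`;
}

// `res` is a Node.js http.ServerResponse; `chunks` is any (async) iterable,
// for example the result of calling a chatCompletion generator.
async function streamAsSSE(res, chunks) {
  res.writeHead(200, {
    'Content-Type': 'text/event-stream',
    'Cache-Control': 'no-cache',
    Connection: 'keep-alive',
  });

  for await (const chunk of chunks) {
    res.write(toSSE(chunk));
  }

  // Hypothetical end-of-stream marker so the client knows when to stop.
  res.write('data: [DONE]\n\n');
  res.end();
}
```

In a Nitro handler the underlying Node response should be reachable as `event.node.res`, but check your framework's docs, since some provide their own streaming helpers.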

We now have what we need on our server. I've left out error handling to keep these examples as simple as possible. Let's look at the client.

⚡ EventSource

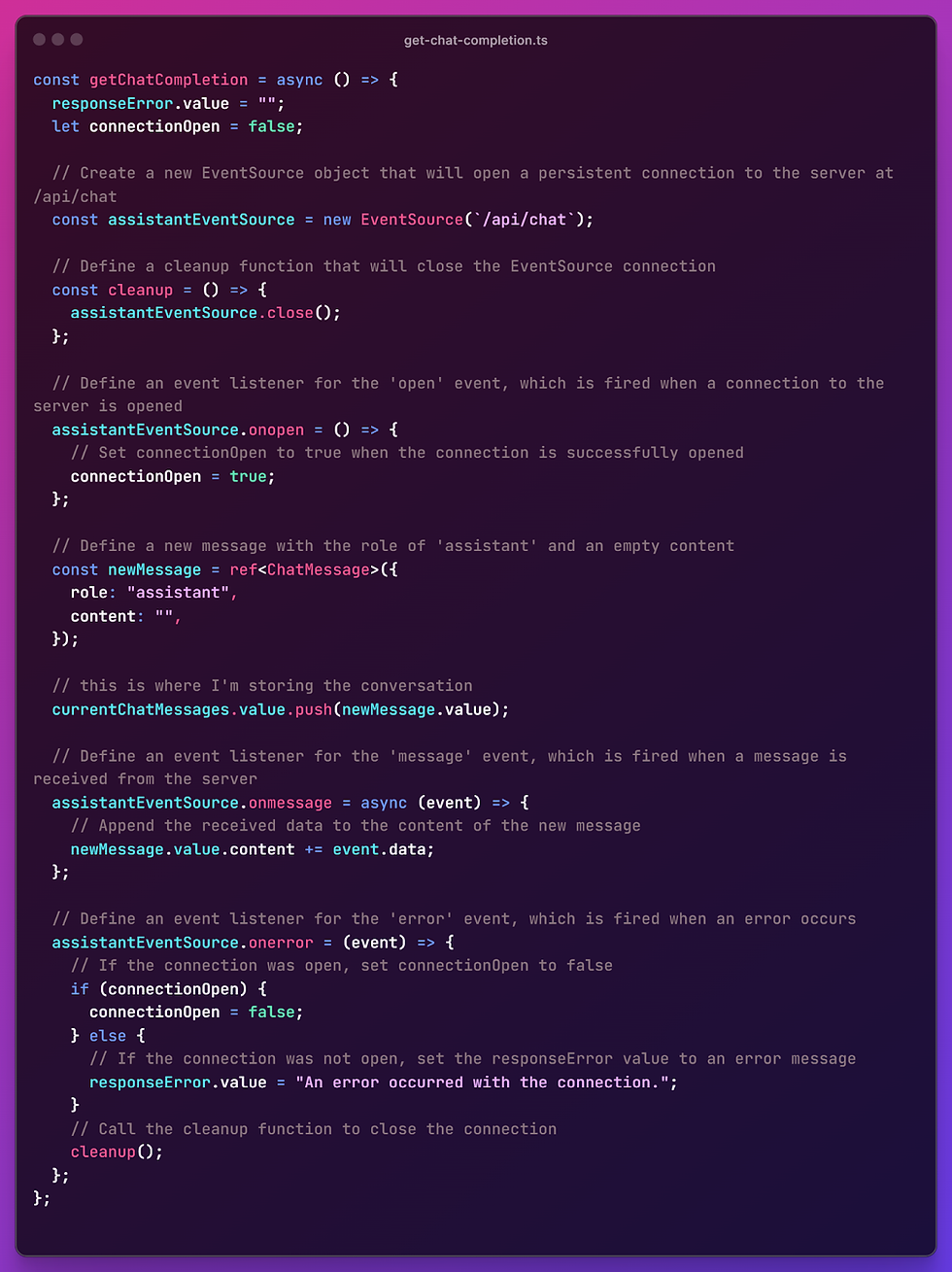

We can now use an event source in our client to listen for and consume the events that our SSE endpoint is pushing. I'll use the standard `EventSource` API:
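A minimal sketch of the client side. The `listenToStream` name, the `/api/chat` URL and the `[DONE]` marker are assumptions of this example, and it expects the server to send each chunk JSON-encoded. The third parameter only exists so the function can be exercised outside a browser.

```javascript
function listenToStream(url, onChunk, EventSourceImpl = globalThis.EventSource) {
  const source = new EventSourceImpl(url);

  // Fires once for every "data: ..." message the server sends.
  source.onmessage = (event) => {
    if (event.data === '[DONE]') {
      // Close explicitly: EventSource reconnects on its own otherwise.
      source.close();
      return;
    }
    onChunk(JSON.parse(event.data));
  };

  // Stop retrying if the connection fails.
  source.onerror = () => source.close();

  return source;
}

// Browser usage (hypothetical endpoint):
//   let reply = '';
//   listenToStream('/api/chat', (text) => { reply += text; });
```

Note that `EventSource` only makes GET requests, so any prompt data has to travel in the URL or be sent to the server some other way first.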

And this should work. All you need to do now is create your UI, handle errors and you've got yourself a basic AI chatbot with streamed responses!

Conclusion

Integrating real-time chat functionalities with server-sent events and generator functions in a Node environment provides a powerful way to enhance user experience in web applications. Whether you are looking to develop an AI-driven chat interface or simply stream data efficiently from server to client, the concepts above should give you a solid foundation to work from. The combination of server-sent events and the asynchronous control afforded by generator functions creates a seamless and interactive user experience. I hope this has been useful - there may be better ways to do the same thing but this is how I figured it out. Nothing beats learning through doing!

The team at Text Alchemy used these concepts to help build the development server for our open-source prompt-chaining library RetortJS.

Check out my repo if you'd like to see the full code. I'd love to hear alternative strategies or problems you have faced using any of these techniques.
